Governance for AI agents extends beyond the EU. In the United States, state-level legislation is emerging. For example, Texas enacted the Texas Responsible AI Governance Act (TRAIGA) in June 2025. The law, which takes effect on January 1, 2026, imposes categorical restrictions on developing and deploying AI for purposes such as behavioral manipulation, unlawful discrimination, and the creation of child pornography or deepfakes. It reflects a growing trend of governments recognizing the need to regulate AI agents, even as technological advances often outpace regulatory development.

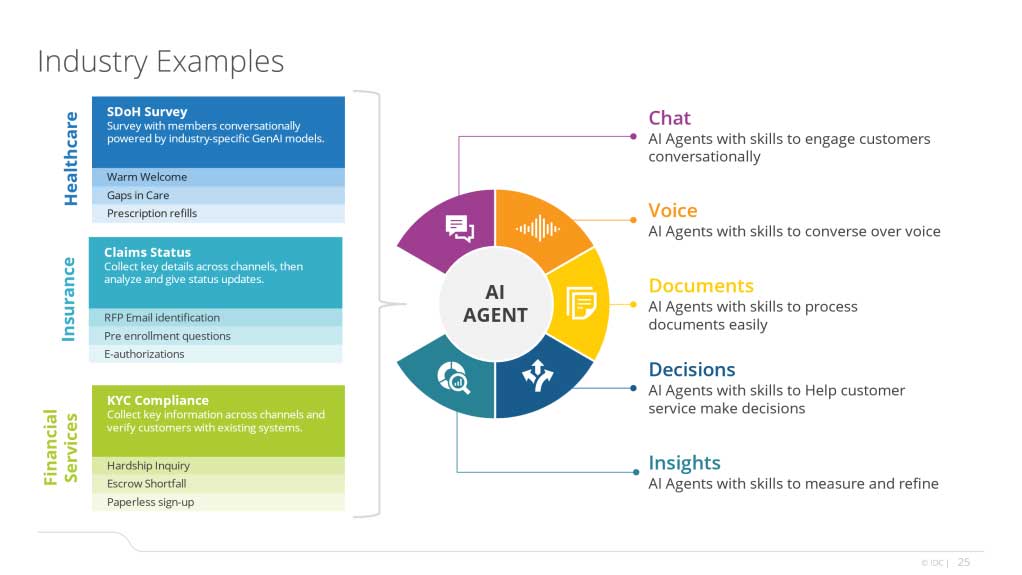

Effective governance requires a multidisciplinary approach that involves stakeholders from technology, law, ethics, and business. Key practices include forming cross-functional governance committees, implementing automated monitoring tools that assess agent activities against regulations, and using modern data catalogs to track data lineage, quality, and compliance. These practices are crucial for managing the inherent complexity and unpredictability of agentic systems, which can introduce cybersecurity risks through poorly governed APIs and are difficult to keep compliant when requirements are ambiguous or contradictory.
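
To make the idea of automated monitoring concrete, the following is a minimal sketch of a checker that compares logged agent actions against a small set of rules. Everything in it is an assumption made for illustration: the `AgentAction` and `Rule` types, the `audit` function, the allow-list of APIs, and the two rules themselves are hypothetical and are not derived from TRAIGA or any other statute.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class AgentAction:
    """One logged agent activity (hypothetical schema)."""
    agent_id: str
    action: str                      # e.g. "call_api", "send_email"
    target: str                      # API host, recipient, etc.
    data_tags: frozenset = frozenset()


@dataclass(frozen=True)
class Rule:
    """A compliance rule expressed as a predicate over an action."""
    rule_id: str
    description: str
    violates: Callable[[AgentAction], bool]


# Illustrative allow-list; a real deployment would load this from policy.
APPROVED_APIS = {"crm.internal", "billing.internal"}

# Illustrative rules only; they do not encode any actual regulation.
RULES = [
    Rule(
        "R1",
        "Agents may only call approved APIs",
        lambda a: a.action == "call_api" and a.target not in APPROVED_APIS,
    ),
    Rule(
        "R2",
        "Personal data must not be sent by email",
        lambda a: a.action == "send_email" and "personal_data" in a.data_tags,
    ),
]


def audit(actions: Iterable[AgentAction], rules=RULES):
    """Return (agent_id, rule_id) pairs for every rule an action violates."""
    findings = []
    for act in actions:
        for rule in rules:
            if rule.violates(act):
                findings.append((act.agent_id, rule.rule_id))
    return findings


log = [
    AgentAction("a1", "call_api", "crm.internal"),
    AgentAction("a2", "call_api", "unknown.example"),
    AgentAction("a3", "send_email", "partner@example.com",
                frozenset({"personal_data"})),
]
print(audit(log))  # [('a2', 'R1'), ('a3', 'R2')]
```

Expressing each rule as a predicate keeps the rule set reviewable by a cross-functional committee, since each entry pairs a plain-language description with the check that enforces it. A sketch like this only flags violations after the fact; it does not resolve ambiguous or contradictory requirements.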

The legal framework for AI agents is also being shaped by the concept of “risky agents without intentions,” under which the law holds the organizations that deploy AI to standards of reasonable care and risk reduction, rather than attributing intent to the AI itself. This approach places responsibility on the human actors who design, maintain, and implement the technology. Furthermore, applying agency law and theory highlights challenges such as information asymmetry and discretionary authority, and suggests that conventional solutions like monitoring may be insufficient for agents operating at high speed and scale. New technical and legal infrastructure is therefore needed to support the governance principles of inclusivity, visibility, and liability.